Timeboxing

The strategy for test design presented in my book is to budget test design time for each use case based on an operational profile: an analysis of how frequently each use case is used. After all, frequency of use is opportunity for failure: the more a use case is used, the more opportunities the user has to find defects. The idea of budgeting a fixed time for a task is called timeboxing. Timeboxing is a planning strategy, often associated with iterative software development, in which the duration of a task (designing tests for a use case) is fixed, forcing hard decisions about what scope (number and rigor of tests) can be delivered in the allotted time. What’s new in the approach here is that the budgeted times are based on an operational profile.

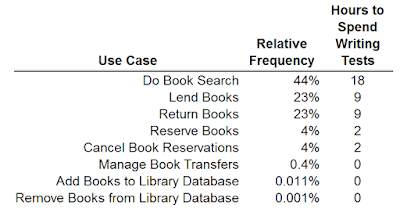

To illustrate, say you’ve been given a week to write test cases from the use cases of the library system (see last two posts). You estimate that during that week your team will be able to spend about 40 staff hours. To get an idea of how best to budget the team’s time, you construct a quick spreadsheet using the relative frequencies from the operational profile (again, see last two posts). Results are shown below; hours budgeted (right column) have been rounded to the nearest whole hour.

Allocating 40 hours of test design based on the operational profile
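The spreadsheet arithmetic is simple enough to sketch in a few lines of Python. This is only an illustration: the use case names and usage counts below are hypothetical placeholders, not the actual operational profile of the library system from the earlier posts, and `allocate_hours` is a name made up for this example.

```python
def allocate_hours(profile, total_hours):
    """Split total_hours across use cases in proportion to frequency of use.

    profile maps each use case name to its relative frequency (any
    consistent unit works, since only the proportions matter).
    Hours are rounded to the nearest whole hour, so the rounded values
    are not guaranteed to add up to total_hours in general.
    """
    total_freq = sum(profile.values())
    return {
        name: round(total_hours * freq / total_freq)
        for name, freq in profile.items()
    }


# HYPOTHETICAL profile: uses per 1,000 sessions (illustrative numbers only).
profile = {
    "Check Out Book": 450,
    "Return Book": 400,
    "Reserve Book": 100,
    "Add New Book": 40,
    "Remove Book": 10,
}

budget = allocate_hours(profile, 40)
for name, hours in budget.items():
    print(f"{name:<16} {hours:>3} h")
```

With these made-up numbers the least-used use case rounds down to zero hours, which is exactly the situation that can make a strictly proportional budget uncomfortable.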

Alternatively, you may decide you really want some tests designed for every use case, regardless of how little it is used. The figure below illustrates budgeting the 40 hours of test design to strike a balance between honoring the spirit of the operational profile and leaving some test design time for all use cases. I think it is the spirit of what the operational profile is showing us that is important for planning; that, combined with some common sense, will lead to a better allocation of time.

Reallocation of 40 hours honoring the spirit of the operational profile, yet still making sure each use case has at least a minimal amount of test design.
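A reallocation like this is usually a judgment call, but one mechanical way to approximate it is to reserve a minimum number of hours for every use case and then split whatever remains in proportion to frequency of use. The sketch below assumes that approach; the two-hour minimum, the use case names, the usage counts, and the function name `allocate_with_floor` are all hypothetical and chosen only for illustration.

```python
def allocate_with_floor(profile, total_hours, min_hours):
    """Give every use case min_hours, then share the rest by frequency.

    profile maps use case names to relative frequencies; total_hours and
    min_hours are whole hours. Leftover hours from rounding down go to
    the use cases with the largest fractional shares, so the result
    always adds up to total_hours.
    """
    reserved = min_hours * len(profile)
    if reserved > total_hours:
        raise ValueError("minimum hours alone exceed the total budget")

    remaining = total_hours - reserved
    total_freq = sum(profile.values())
    shares = {n: remaining * f / total_freq for n, f in profile.items()}

    # Start from the floor plus the whole-hour part of each share.
    budget = {n: min_hours + int(s) for n, s in shares.items()}

    # Hand out the hours lost to truncation, largest fraction first.
    leftover = total_hours - sum(budget.values())
    by_fraction = sorted(shares, key=lambda n: shares[n] - int(shares[n]),
                         reverse=True)
    for name in by_fraction[:leftover]:
        budget[name] += 1
    return budget


# HYPOTHETICAL profile: uses per 1,000 sessions (illustrative numbers only).
profile = {
    "Check Out Book": 450,
    "Return Book": 400,
    "Reserve Book": 100,
    "Add New Book": 40,
    "Remove Book": 10,
}

floored = allocate_with_floor(profile, 40, min_hours=2)
for name, hours in floored.items():
    print(f"{name:<16} {hours:>3} h")
```

The heavily used use cases still get most of the time, but nothing is left with zero hours. Treat the output as a starting point to adjust with common sense rather than a final answer.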

Timeboxing, Use Case Levels of Test, and Test Rigor

The four use case levels of test

For use cases for which many hours have been budgeted, this strategy will produce tests at all levels, 2-4. And at any given level, when the option is available to increase or decrease rigor, rigor could be increased.[1] Testing at more and deeper levels, with increased rigor, translates to more tests, in greater detail, and ultimately more test execution time for frequently used use cases.

For use cases for which few hours have been budgeted, test design may not progress deeper than level 2 (use case) or level 3 (single scenario) before time is up. And at any given level, less rigor is likely, to accommodate the smaller time budget. Tests at fewer levels with less rigor translate to fewer, coarser-grained tests for infrequently used use cases.
