Figure: The book is organized around four use case levels of test

Four Use Case Levels of Test

In my book, Use Case Levels of Test, I like to use the analogy of the “View from 30,000 feet” to illustrate how use case levels let you zoom from the big picture (the major interstate highways through your application) down to the discrete operations that form the steps of a use case scenario. These use case levels of test provide a sort of road map for approaching test design for a system, working top-down from the 30,000-foot view to ground level.

The four parts of the book are organized around these four levels of use case test design. The bit of pseudo code below illustrates a strategy for using the parts of the book.

Apply Part I to the Use Case Diagram. This produces a test-adequate set of prioritized use cases with budgeted test design time for each, as well as integration tests for the set.

For each use case U from the previous step, until budgeted time for U expires, do:

· Apply Part II to use case U to produce a set of tests to cover its scenarios

· For each test T from the previous step, in priority order of the scenario T covers, do:

    o Apply Part III to test T, creating additional tests based on inputs and combinations of inputs

    o Apply Part IV to any critical operations in test T, producing additional tests based on preconditions, postconditions and invariants
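
For readers who prefer code to pseudocode, the same loop can be sketched in Python. This is only an illustration under stated assumptions, not something taken from the book: the `UseCase` class, the `design_tests` function, and the idea that every test design step costs one unit of time are all hypothetical, and the "tests" are just labels.

```python
from dataclasses import dataclass, field

@dataclass
class UseCase:
    name: str
    budget: float    # budgeted test design time (an output of Part I)
    scenarios: list  # scenario names, highest priority first
    tests: list = field(default_factory=list)

def design_tests(use_cases, cost_per_step=1.0):
    """Walk Parts II-IV for each use case until its budget is spent."""
    for uc in use_cases:
        remaining = uc.budget
        # Part II: one test per scenario, in priority order.
        scenario_tests = []
        for scenario in uc.scenarios:
            if remaining < cost_per_step:
                break
            scenario_tests.append(f"{uc.name}/{scenario}")
            remaining -= cost_per_step
        uc.tests.extend(scenario_tests)
        # Parts III and IV: deepen each scenario test while time remains.
        for test in scenario_tests:
            for part in ("inputs", "operations"):
                if remaining < cost_per_step:
                    break
                uc.tests.append(f"{test}+{part}")
                remaining -= cost_per_step
    return use_cases

# Hypothetical use cases: the larger budget yields more tests.
planned = design_tests([
    UseCase("Withdraw Cash", budget=6, scenarios=["main", "alternate"]),
    UseCase("Change PIN", budget=3, scenarios=["main", "alternate"]),
])
for uc in planned:
    print(uc.name, len(uc.tests))
```

The point of the sketch is the shape of the loop: the budget, not the number of available techniques, decides when test design for a use case stops.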

Timeboxing, Operational Profile, and Use Case Levels of Test

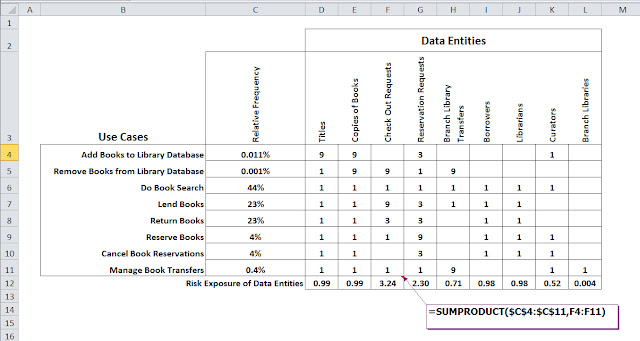

Recall from a previous post that this book is organized around a strategy of budgeting test design time for each use case, often referred to in planning as timeboxing. But rather than an arbitrary allocation of time (say equal time for all), time is budgeted based on an operational profile: an analysis of the high traffic paths through your system.

Because Parts II-IV are timeboxed according to the operational profile (notice the phrase “until budgeted time expires”), this strategy will produce more tests for frequently used use cases. This is the key contribution from the field of software reliability engineering to maximizing quality while minimizing testing costs.
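
As a rough illustration of budgeting by operational profile, the sketch below splits a fixed number of test design hours across use cases in proportion to how often each is run. The function name `budget_by_profile`, the use case names, and the usage counts are hypothetical, and a strictly proportional split is just one simple way to apply the idea.

```python
def budget_by_profile(runs_per_day, total_hours):
    """Split total_hours across use cases in proportion to usage."""
    total_runs = sum(runs_per_day.values())
    return {name: total_hours * runs / total_runs
            for name, runs in runs_per_day.items()}

# Hypothetical operational profile: how often each use case runs per day.
profile = {"Check Balance": 600, "Withdraw Cash": 300, "Transfer Funds": 100}
budget = budget_by_profile(profile, total_hours=40)
for name, hours in budget.items():
    print(f"{name}: {hours:.1f} hours")
```

Compare this with an equal split of about 13 hours each: the profile-based budget moves test design time toward the paths users actually travel.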

This strategy is graphically depicted below. Numbers refer to the parts, 1 through 4, of the book.

Figure: Strategy for budgeting test design time to maximize quality while minimizing testing costs

Let’s walk through each part of the book and see what is covered.

Part I The Use Case Diagram

Part I of the book starts with the view of the system from 30,000 feet: the use case diagram. At this level we see a set of actors interacting with a system that is described as a set of use cases. Paths through the system are expressed in terms of traffic across the whole set of use cases belonging to the use case diagram.

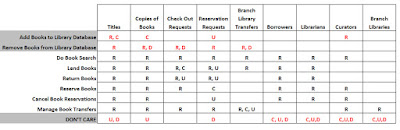

As tests at lower levels will be based on these use cases, it makes sense to begin by asking: Is the use case diagram missing any use cases essential for adequate testing? This is addressed in Chapter 1, where we look at the use of a C.R.U.D. matrix, a tool allowing you to judge test adequacy by how well the use cases of the use case diagram cover the data life-cycle of entities in your system. Not only does the C.R.U.D. matrix test the adequacy of the use case diagram, it is essentially a high-level test case for the entire system, providing expected inputs (read) and outputs (create, update, delete) for the entire system in a compact form.
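
To make the adequacy check concrete, here is a minimal sketch of a C.R.U.D. matrix as a Python dictionary, with a helper that reports which life-cycle operations no use case covers for each entity. The library system, its use cases, and the `missing_operations` helper are all hypothetical examples, not material from the book.

```python
# Rows are use cases, columns are entities, cells hold the C/R/U/D letters.
crud = {
    "Add Book":        {"Book": "C"},
    "Check Out Book":  {"Book": "R", "Loan": "C", "Member": "R"},
    "Return Book":     {"Book": "R", "Loan": "RU"},
    "Register Member": {"Member": "C"},
}

def missing_operations(matrix):
    """Return, per entity, the C/R/U/D operations no use case performs."""
    covered = {}
    for ops_by_entity in matrix.values():
        for entity, ops in ops_by_entity.items():
            covered.setdefault(entity, set()).update(ops)
    return {entity: sorted(set("CRUD") - ops)
            for entity, ops in covered.items()
            if set("CRUD") - ops}

gaps = missing_operations(crud)
for entity, ops in gaps.items():
    print(entity, "is never:", ", ".join(ops))
```

Each gap is a prompt for a question: is a use case missing from the diagram (for example, one that deletes a book), or is the operation genuinely out of scope?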

Still working at the use case diagram level, in Chapter 2 we look at another tool, the operational profile, as a way to help you, the tester, concentrate on the most frequently used use cases, and hence those having a greater chance of failure in the hands of the user. The chapter describes how to construct an operational profile from the use case diagram, and how to use an operational profile to prioritize use cases; budget test design time per use case; spot “high risk” data; and design load and stress tests.

Chapter 3 wraps up the look at testing at the use case diagram level by introducing techniques for testing the use cases in concert, i.e. integration testing of the use cases.

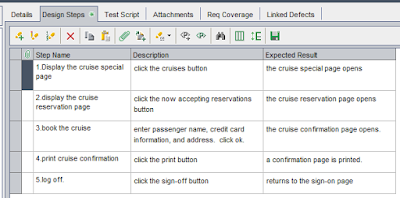

Part II The Use Case

Part II drops down to the 20,000-foot view, where we have the individual use case itself. At this level of test, paths through the system are in terms of paths through an individual use case.

In Chapter 4

the book looks at control flow graphs, a graph oriented approach to

designing tests based on modeling paths through a use case. For use case based

testing, control flow graphs have a lot going for them: easy to learn, work

nicely with risk driven testing, provide a way to

estimate number of needed tests, and can also be re-used for design of load

tests.
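
As a small illustration of how a control flow graph yields both tests and a test count, the sketch below models a use case as a directed graph and enumerates every path from start to end. The "Withdraw Cash" flow and the `enumerate_paths` function are hypothetical, and the sketch assumes the graph has no loops; a use case with loops would need a bound on how many times each loop is followed.

```python
def enumerate_paths(graph, start, end):
    """Return every path from start to end in an acyclic flow graph."""
    if start == end:
        return [[end]]
    paths = []
    for next_step in graph.get(start, []):
        for tail in enumerate_paths(graph, next_step, end):
            paths.append([start] + tail)
    return paths

# Hypothetical "Withdraw Cash" use case: a main flow plus two alternate flows.
flow = {
    "insert card": ["enter PIN"],
    "enter PIN": ["choose amount", "reject PIN"],
    "choose amount": ["dispense cash", "insufficient funds"],
    "reject PIN": ["end"],
    "dispense cash": ["end"],
    "insufficient funds": ["end"],
}
paths = enumerate_paths(flow, "insert card", "end")
for path in paths:
    print(" -> ".join(path))
```

Each enumerated path is a candidate test, and the number of paths gives a first estimate of how many tests full path coverage of the use case would take.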

Chapter 5 looks at two alternate techniques for working with use cases at this level of test: decision tables and pairwise testing. A decision table is a table showing combinations of inputs with their associated outputs and/or actions (effects): briefly, each row of the decision table describes a separate path through the use case.
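
A decision table maps naturally onto a list of rows, each pairing a combination of inputs with its expected action. The sketch below is a hypothetical example (the withdrawal rules, condition names, and `expected_action` helper are assumptions made for illustration), but it shows how each row doubles as a test case with a built-in expected result.

```python
# Each row: a combination of input conditions and the expected action.
decision_table = [
    ({"pin_valid": True,  "funds_sufficient": True},  "dispense cash"),
    ({"pin_valid": True,  "funds_sufficient": False}, "show insufficient funds"),
    ({"pin_valid": False, "funds_sufficient": True},  "reject PIN"),
    ({"pin_valid": False, "funds_sufficient": False}, "reject PIN"),
]

def expected_action(table, **inputs):
    """Look up the action for one combination of inputs."""
    for conditions, action in table:
        if conditions == inputs:
            return action
    raise LookupError(f"no row covers {inputs}")

print(expected_action(decision_table, pin_valid=True, funds_sufficient=False))
```

A lookup that raises an error for an uncovered combination is useful in its own right: it flags input combinations the table, and possibly the use case, never considered.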

For some use cases any combination of input values (or most) is valid and describes a path through the use case. In such cases, the number of paths becomes prohibitive to describe, much less test! Chapter 5 concludes with a technique to address such use cases: pairwise testing.
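
The idea behind pairwise testing is to cover every pair of input values at least once rather than every full combination. The sketch below uses a simple greedy selection to do that; it is an assumption-laden illustration (the `pairwise_tests` function and the parameters are hypothetical, and the greedy approach is not guaranteed to find the smallest possible set), not the specific procedure described in Chapter 5.

```python
from itertools import combinations, product

def pairwise_tests(parameters):
    """Greedily pick full combinations until every value pair is covered."""
    names = list(parameters)

    def pairs_of(combo):
        # All (parameter, value) pairs exercised together by one combination.
        return {((a, combo[a]), (b, combo[b]))
                for a, b in combinations(names, 2)}

    candidates = [dict(zip(names, values))
                  for values in product(*parameters.values())]
    uncovered = set().union(*(pairs_of(c) for c in candidates))
    tests = []
    while uncovered:
        # Choose the combination that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        tests.append(best)
        uncovered -= pairs_of(best)
    return tests

# Hypothetical inputs to a checkout use case: 3 x 2 x 2 = 12 combinations.
parameters = {
    "browser": ["Chrome", "Firefox", "Safari"],
    "account": ["guest", "member"],
    "payment": ["card", "voucher"],
}
tests = pairwise_tests(parameters)
print(len(tests), "pairwise tests instead of 12 exhaustive ones")
```

The saving grows quickly with the number of inputs, because the count of exhaustive combinations multiplies with every added input while the count of value pairs grows far more slowly.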

Part III A Single Scenario

In this part of the book we arrive at the 10,000-foot view, and test design will focus on a single scenario of a use case. While a scenario represents a single path through the system from a black-box perspective, at the code level different inputs to the same scenario likely cause the code to execute differently.

Chapter 6 looks at test design from scenario inputs, with the aim of more fully testing the paths through the actual code. It covers the most widely discussed topics in input testing: error guessing, random input testing, equivalence partitioning, and boundary value analysis.
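
For a single numeric input, equivalence partitioning and boundary value analysis can be sketched in a few lines. The example assumes a hypothetical withdrawal amount that is valid from 20 to 500 inclusive; the function names and the range are illustrative only.

```python
def partition(value, low, high):
    """Name the equivalence partition an integer input falls into."""
    if value < low:
        return "invalid: below range"
    if value > high:
        return "invalid: above range"
    return "valid"

def boundary_values(low, high):
    """Test values at and on either side of each boundary of [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]

# Hypothetical input: a withdrawal amount valid from 20 to 500 inclusive.
for amount in boundary_values(20, 500):
    print(amount, partition(amount, 20, 500))
```

Equivalence partitioning says one value per partition is enough in principle; boundary value analysis says which values to pick, since defects tend to cluster at the edges of a partition.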

For a use case scenario with more than a single input, after selecting test inputs for each separate input there’s the question of how to test the inputs in combination. Chapter 6 concludes with ideas for “working smart” in striking the balance between rigor and practicality for testing inputs in combination.

Chapter 7 looks at additional (and a bit more advanced) techniques for describing inputs and how they are used to do equivalence partitioning and boundary value analysis testing. The chapter begins with a look at syntax diagrams, which will look very familiar as they re-use directed graphs (which you’ll have already seen used as control flow graphs in Chapter 4).

Regular expressions are cousins of syntax diagrams, though not graphic (visual) in nature. While much has been written about regular expressions, a goal in Chapter 7 is to make a bit more specific how regular expressions relate to equivalence partitioning and boundary value analysis, a point that doesn’t always seem to come across in discussions of regular expressions in test design.
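
One way to see the connection is that each part of a regular expression suggests partitions and boundaries: a character class splits characters into "in" and "out", and a repetition count has a lower and an upper limit. The sketch below uses a hypothetical product code format (two uppercase letters followed by three to five digits) with Python's standard `re` module; the format and sample values are assumptions made for illustration.

```python
import re

# Hypothetical input format: two uppercase letters, then 3 to 5 digits.
CODE = re.compile(r"[A-Z]{2}\d{3,5}")

# Sample inputs chosen from the expression itself, with the expected verdict.
samples = {
    "AB123": True,      # lower boundary of the digit repetition (3)
    "AB12345": True,    # upper boundary of the digit repetition (5)
    "AB12": False,      # just below the lower boundary
    "AB123456": False,  # just above the upper boundary
    "ab123": False,     # outside the letter character class
}
results = {text: bool(CODE.fullmatch(text)) for text in samples}
for text, accepted in results.items():
    print(text, "accepted" if accepted else "rejected")
```

Note the use of `fullmatch`, which requires the whole input to match; a plain `match` would accept "AB123456" because its first seven characters fit the pattern.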

For the adventuresome at heart, the last technique discussed in Chapter 7 is recursive definitions, with examples written in Prolog (Programming in Logic). Recursion is one of those topics that people are sometimes intimidated by. But it is truly a Swiss-army knife for defining, partitioning and doing boundary value analysis of all types of inputs (and outputs), be they numeric in nature, sets of things, Boolean or syntactic.

Part IV Operations

In Part IV, the final part of the book, we arrive at “ground level”: test design from the individual operations of a use case scenario. A use case describes the behavior of an application or system as a sequence of steps, some of which result in the invocation of an operation in the system. The operation is the finest level of granularity for which we’ll be looking at test design. Just as varying inputs may cause execution of different code paths through a single use case scenario, other factors (e.g. violated preconditions) may cause execution of different code paths through a single operation.
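
To illustrate how a violated precondition sends an operation down a different code path, here is a minimal sketch of a single operation with its precondition, postcondition and invariant written out. The `Account` class and its rules are hypothetical and are not an example from the book.

```python
class Account:
    def __init__(self, balance=0):
        self.balance = balance

    def withdraw(self, amount):
        # Precondition: the amount is positive and covered by the balance.
        if not (0 < amount <= self.balance):
            raise ValueError("precondition violated")
        old_balance = self.balance
        self.balance -= amount
        # Postcondition: the balance dropped by exactly the amount withdrawn.
        assert self.balance == old_balance - amount
        # Invariant: the balance is never negative.
        assert self.balance >= 0

account = Account(balance=100)
account.withdraw(40)        # precondition holds: normal path
try:
    account.withdraw(500)   # precondition violated: failure path
except ValueError as error:
    print("rejected:", error)
print(account.balance)
```

Each way the precondition can be false (a zero amount, a negative amount, an amount above the balance) suggests at least one additional test for the operation.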

In Chapter 8, “Preconditions, Postconditions and Invariants: Thinking About How Operations Fail,” we’ll look at specifying the expected behavior of abstract data types and objects (model-based specification) and apply it to use case failure analysis: the analysis of potential ways a use case operation might fail. In doing so, the reader will learn some things about preconditions and postconditions they forgot to mention in “Use Case 101”!

You may find Chapter 8 the most challenging in the book, as it involves lightweight formal methods to systematically calculate preconditions as a technique for failure analysis. If prior to reading this book your only exposure to preconditions and postconditions has been via the use case literature, this chapter may be a bit like, as they say, “drinking from a fire hose”. For the reader not interested in diving this deep, the chapter concludes with a section titled “The Least You Need to Know About Preconditions, Postconditions and Invariants,” providing one fundamental lesson and three simple rules that the reader can use on absolutely any use case anytime.

Having gained some insight into the true relationship between preconditions, postconditions and invariants in Chapter 8, Chapter 9 provides “lower-tech” ways to identify preconditions that could lead to the failure of an operation. We’ll look at ways to use models built from sets and relations (i.e. discrete math for testers) to help spot preconditions without actually going through the formalization of calculating the precondition. More generally we’ll find that these models are a good way to brainstorm tests for use cases at the operation level.

In the last post I talked about how to apply the concept of risk exposure to the data of your system or application. The risk exposure of an event is the likelihood that the event will actually happen, multiplied by the expected severity of the event. Each time your customer runs a use case, there is some chance that they will encounter a defect in the product. For use cases, failure is the "event" whose risk exposure we'd like to know. What you’d like to have is a way to compare the risk exposure from use case to use case so you can work smarter, planning to spend time making those use cases with the highest risk exposure more reliable.
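
The definition of risk exposure translates directly into a one-line calculation, which makes use cases easy to compare. In the sketch below the use case names, the likelihood figures (expected failures per 1,000 runs) and the 1-to-10 severity scores are all hypothetical; how you estimate likelihood and severity for your own system is a separate question.

```python
def risk_exposure(likelihood, severity):
    """Risk exposure = likelihood of the event x expected severity."""
    return likelihood * severity

# Hypothetical figures: (expected failures per 1,000 runs, severity 1-10).
use_cases = {
    "Check Balance": (6, 2),
    "Withdraw Cash": (3, 9),
    "Transfer Funds": (1, 7),
}
ranked = sorted(use_cases,
                key=lambda name: risk_exposure(*use_cases[name]),
                reverse=True)
for name in ranked:
    print(name, risk_exposure(*use_cases[name]))
```

Notice that the use case that fails most often is not the one with the highest risk exposure: the less frequent but more severe failure ranks first.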
