In my last post I said one of the key goals of my book, Use Case Levels of Test, is to provide you – the tester tasked with designing tests for a system or application – a strategy for budgeting test design time wisely.

My assumption is that you are working in a software development shop where use cases have been used, in whole or in part, to specify the system or application for which you’ve been tasked to write tests, or alternatively that you are familiar enough with use cases to write them as proxy tests before starting real test design.

Use cases play a key role in the “working smart” strategy presented in this book; let’s see why.

Use Cases: Compromise Between Ad Hoc And “Real Tests”

Part of the reason use cases have gained the attention they have in testing is that they are already pretty close to what testers often write for test cases.

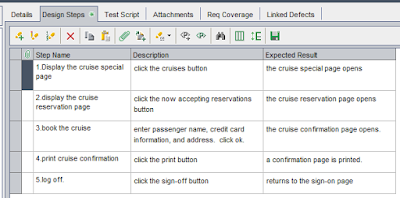

One of the leaders in the testing tool arena is HP’s Quality Center (formerly Mercury Test Director). The example test shown below is part of a Quality Center tutorial in which the tester is instructed that “After you add a test ... you define test steps -- detailed, step-by-step instructions ... A step includes the actions to be performed on your application and the expected results”. As the example illustrates, anyone comfortable with writing use cases would be comfortable writing tests in Quality Center, and vice versa.

Figure: Anyone comfortable with writing use cases would be comfortable writing test cases in Quality Center, and vice versa

The early availability of use cases written by a development team gives testers a good head start on test design. What this means for our strategy is that, in a pinch (you’ve run out of time for test design), use cases provide a good compromise between ad hoc testing and full-blown test cases. On the one hand, ad hoc testing carries the risks of unplanned, undocumented (can’t peer review; not repeatable) testing that depends solely on the improvisation of the tester to find bugs. On the other hand, we have full-fledged test cases, about which even Boris Beizer, a proponent of “real testing” vs. “kiddie testing”, has said “If every test designer had to analyze and predict the expected behavior for every test case for every component, then test design would be very expensive”.

Use Case Levels of Test

Another facet of use cases key to the strategy presented in this book is a way to decompose a big problem (say, write tests for a system) into smaller problems (e.g. test the preconditions on a critical operation) by using use case levels of test.

So what do I mean by use case levels of test? Most testers are familiar with the concept of levels of test such as system test (the testing of a whole system, i.e. all assembled units), integration test (testing two or more units together), or unit test (testing a unit standalone). Use cases provide a way to decompose test design into levels of test as well, based not on units but on paths through the system of increasingly finer granularity.

The parts of my book are organized around four levels of use case test design. In the book I like to use the analogy of the “View from 30,000 feet” to illustrate how the use case levels zoom from the big picture (the major interstate highways through your application) down to the discrete operations that form the steps of a use case scenario. This is illustrated in the figure below.

Figure: The four use case levels of test

Let’s start with the view of the system from 30,000 feet: the use case diagram. At this level we see a set of actors interacting with a system that is described as a set of use cases. This is the coarsest level of paths through your system: the collection of its major interstate highways.

Dropping down to the 20,000-foot view, we have the individual use case itself, typically viewed as a particular “happy path”[1] through the system, with associated branches, some being not-so-happy paths. In other words, a use case is a collection of related paths through the system, each called a scenario.
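
To make the “collection of related paths” idea concrete, here is a minimal sketch that models a use case as a small directed graph of steps and lists every scenario through it. The use case, its step names, and the `scenarios` helper are all hypothetical, invented only for illustration, and the sketch assumes the use case has no loops.

```python
# Hypothetical use case modeled as a directed graph: each key is a step,
# each value lists the steps that may follow it. All names are illustrative.
USE_CASE = {
    "start": ["enter_order"],
    "enter_order": ["validate_order"],
    "validate_order": ["confirm_order", "reject_order"],  # happy path first
    "confirm_order": ["end"],
    "reject_order": ["end"],
    "end": [],
}


def scenarios(graph, step="start", path=None):
    """Yield every path (scenario) from `step` to "end" in an acyclic graph."""
    path = (path or []) + [step]
    if step == "end":
        yield path
        return
    for next_step in graph[step]:
        yield from scenarios(graph, next_step, path)


all_scenarios = list(scenarios(USE_CASE))
for scenario in all_scenarios:
    print(" -> ".join(scenario))
```

Each printed line is one scenario: the first is the happy path, and the second is the branch where the order is rejected.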

From there we drop to the 10,000-foot view, which zooms in on a particular scenario of a use case, i.e. one path through a use case. While a scenario represents a single path through the system from a black-box perspective, different inputs to the same scenario very likely cause the underlying code to execute differently; there are multiple paths through the single scenario at the code level.

And finally, at ground level we reach discrete operations: the finest-granularity action and reaction in the dance between actor and system. These are what make up the steps of a scenario. At this level, paths are through the code implementing an operation. If you are dealing with an application or system implemented using object-oriented technology (quite likely), this could be paths through a single method on an object. A main concern at this level is the paths associated with operation failures: testing the conditions under which a use case operation is intended to work correctly and, conversely, the conditions under which it might fail.
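
As a rough illustration of testing at the operation level, the sketch below checks a single operation both under conditions where it is intended to work and under conditions where it should fail. The `withdraw` operation, its preconditions, and the `fails` helper are assumptions made up for this example; they are not taken from the book.

```python
def withdraw(balance, amount):
    """Hypothetical operation: return the new balance after a withdrawal.

    Assumed preconditions (for illustration only):
    amount > 0 and amount <= balance.
    """
    if amount <= 0:
        raise ValueError("amount must be positive")
    if amount > balance:
        raise ValueError("insufficient funds")
    return balance - amount


def fails(balance, amount):
    """Return True if the operation rejects the given inputs."""
    try:
        withdraw(balance, amount)
    except ValueError:
        return True
    return False


# Conditions under which the operation is intended to work correctly
assert withdraw(100, 30) == 70
assert withdraw(100, 100) == 0  # boundary: exactly the available balance

# Conditions under which the operation should fail
assert fails(100, 0)    # violates amount > 0
assert fails(100, 101)  # violates amount <= balance
```

The point of the sketch is the pairing: every precondition gets at least one test that satisfies it and one that violates it.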

The fact that use cases provide an alternate way to view levels of test wouldn’t necessarily be all that interesting to the tester but for a couple of important facts.

First is the fact that the levels are such that each has certain standard black-box test design techniques that work well at that level. So the use case levels of test provide a way to index that wealth of black-box testing techniques; this helps answer that plea of “Just tell me where to start!”.

Second, for each use case level of test, the “path through a system” metaphor affords a way to prioritize where you do test design at that level, e.g. spending more time on the paths most frequently traveled by the user or that touch critical data.
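
One simple way to picture this kind of prioritization is to split a fixed test design budget across paths in proportion to how often users travel them. The sketch below does only that; the use case names, the usage counts, and the `allocate` helper are hypothetical, and a real plan would also weigh factors such as data criticality.

```python
# Hypothetical usage counts per 1,000 user sessions; illustrative numbers only.
usage = {"place_order": 600, "track_order": 300, "cancel_order": 100}


def allocate(budget_hours, profile):
    """Split a test design budget in proportion to each path's usage count."""
    total = sum(profile.values())
    return {name: budget_hours * count / total for name, count in profile.items()}


plan = allocate(40, usage)
for name, hours in plan.items():
    print(f"{name}: {hours:.1f} hours")
```

With a 40-hour budget, the most heavily traveled path receives the largest share of design time and the rarely used one receives the smallest.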

[1] If you are unfamiliar with this term, the “happy path” of a use case is the default scenario, or path, through the use case, generally free of exceptions or errors; life is “happy”.
